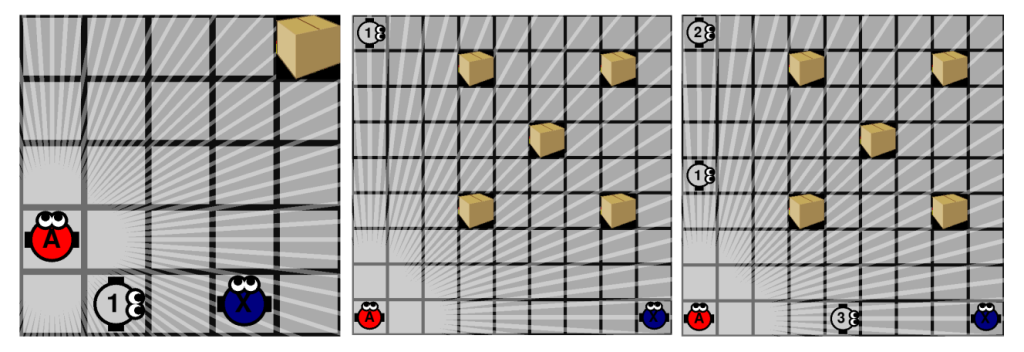

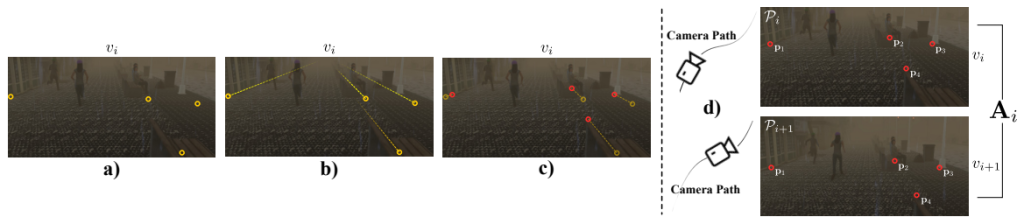

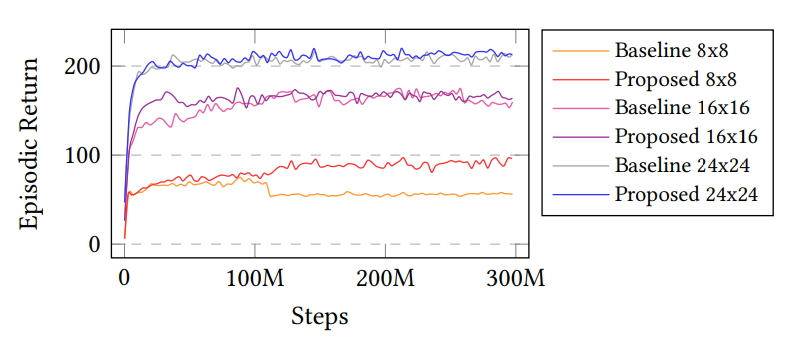

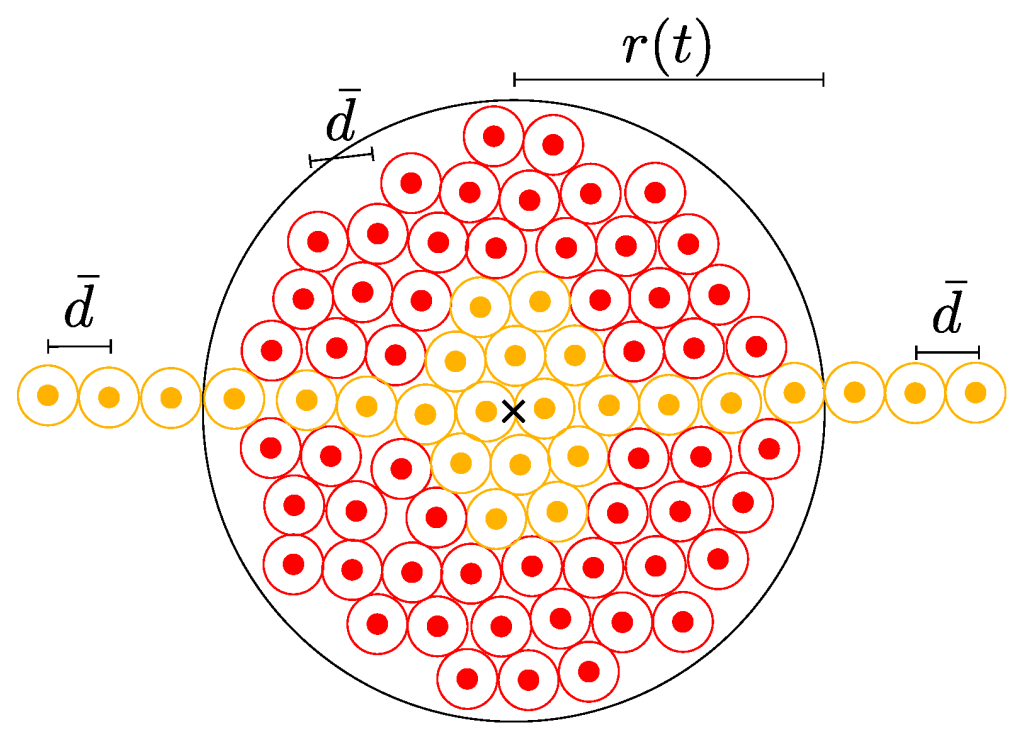

In our recent work, published in Mathematics, we combine experiments with mathematical analysis to estimate the task completion time of a swarm of robots. The work is a fundamental step towards approximating the global behaviour of a robot swarm when the individual robots are controlled by local interaction rules.

Our paper is freely available at https://www.mdpi.com/2227-7390/13/21/3552, and our source code is on GitHub.