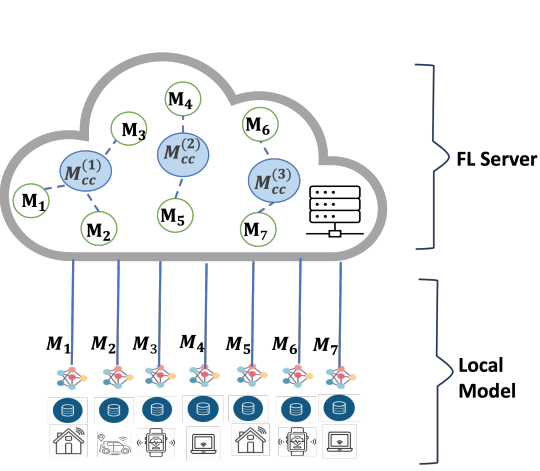

We presented our work, “Robust Federated Learning Method against Data and Model Poisoning Attacks with Heterogeneous Data Distribution”, at the European Conference on Artificial Intelligence (ECAI). The work introduces a novel technique for defending federated learning against data and model poisoning attacks, even when the data is highly heterogeneous. The paper is freely available here, and the source code is available on GitHub.