The Computation and AI for Social Sciences Hub, or CAISS, is a DAIC-X initiative and part of the Defence AI Research Centre (DARe). CAISS itself is delivered by a joint team comprising academics at Lancaster University and Dstl staff.

CAISS recently ran a two-day workshop at the University of Exeter, known as the CAISSathon, to develop ideas for research projects on the transparency and explainability of AI systems, also touching on related areas such as fairness. A further aim of the workshop was to help build a community of researchers working in this area.

It was attended by over 40 participants from industry, academia, Dstl and other government agencies. Remarkably, only one registered participant did not attend, and this was due to a personal issue.

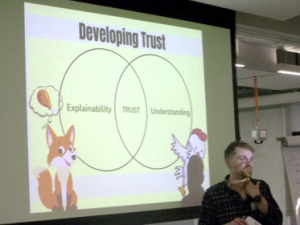

Grace Whordley, the Dstl CAISS lead, opened the event with an overview of CAISS and the workshop's purpose. George Mason then introduced two guest speakers who gave opening talks. The first, Dr Ali Alameer from the University of Salford, spoke on Communicating Fairness in AI. This was followed by a talk from Jordan, one of the Military Advisors, on Trust and Transparency – Utilising Artificial Intelligence within the Military context. Both talks generated considerable interest and sparked lively discussion.

Following these presentations, the delegates were split into seven teams, mixed to ensure a diversity of knowledge, organisation and expertise within each team.

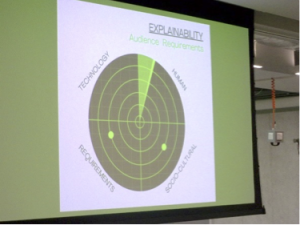

Each team then selected a pre-prepared challenge in the area of transparency and explainability to develop. Teams could also propose their own idea, and some groups chose to adapt the pre-prepared challenges. An example challenge was:

“Is it sensible, or even achievable, to try to apply explainability to all aspects of an AI-based system? If not, what aspect should it apply to, and how can the developer be guided to identify those aspects?”

At the end of the first day, each team gave a brief summary of the challenge it had chosen and the work it had done so far.

Day two began with a brief overview from Dr Sophie Nightingale, who leads the Lancaster CAISS team, followed by an opportunity for attendees to ask questions. Next came a Red Teaming exercise in which teams worked in pairs: one team, the Blue Team, explained what it had done so far, while the other, the Red Team, constructively challenged its approach, allowing the Blue Team to reflect on and respond to those challenges.

Red teaming

Once each team had been Red-teamed, it finalised its work and prepared a short five-minute presentation, delivered at the end of the event. Some of these presentations were very professionally produced!

What next?

The Dstl CAISS team will now summarise the outcomes, which we hope will influence future research and help the academic delegates prepare bids for UKRI calls.

Feedback from participants has been overwhelmingly positive, with calls for a follow-on event in the future.

The event was only possible thanks to the sterling efforts of George Mason and Katie Baron, to whom a big thank you is due.