Phase 2 invited nine participants (healthcare researchers, healthcare practitioners, ethics researchers and other stakeholders) to a scheduled, facilitated online workshop. This was a playful pilot workshop in which participants were asked to join one of two teams, a ‘generator’ or a ‘discriminator’, to imaginatively approximate the dialogic workings of a Generative Adversarial Network (GAN[1]) AI programme in a healthcare setting. Through the interplay between these two teams, and using the stories co-created in Phase 1 as our basis, we deliberately moved away from the question ‘what is an ethical AI?’. Instead, we invited participants to discuss and reflect on what an ethical world is, and how we can build, imagine or reconfigure ethical technological worlds within a healthcare context.

How did we play with the stories in the workshop?

Establishing context

We began by establishing a fictional context for the workshop activity. We explained that we were all part of an AI GAN algorithm called the Ethical World Making Machine, developed by the Service Futures division of the UK National Health Service (NHS). The machine’s purpose was to inform the design of patient care, products and services for this fictional division by identifying whether or not the stories it processed represented an ethical world. We said that these stories came from audio recordings of conversations about health captured in hospital canteens, waiting rooms, surgeries and other health settings. Participants were asked not to question the ethics of recording private conversations without obtaining consent. Of course, in truth, the stories had already been written by participants in Phase 1. We went on to explain that the machine usually ran very efficiently, but… last night there had been a power surge and the stories had been scrambled. We had tried our best to piece them back together, but the result was muddled in places. From here, we ran the activity.

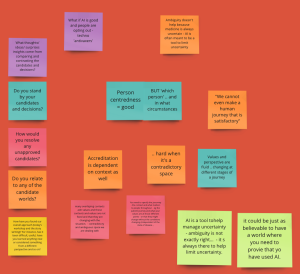

Overview of Miro board for workshop

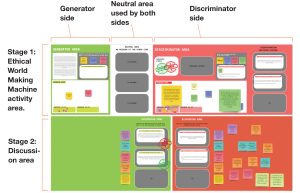

The rest of the workshop was divided into two stages: (1) the Ethical World Making Machine activity; and (2) a plenary group discussion reflecting on the activity and any emerging insights. Both stages were supported by real-time collaboration on Miro boards alongside conversation on Zoom. We used four identical Miro boards, one for each of the stories created in Phase 1. The image below is an overview of the Miro board for Story 2, showing the areas used in both stages of the workshop. In Stage 1, the participants were separated into two teams: Generators and Discriminators. Each team was facilitated in a separate breakout room and instructed to progress through each board in a series of 5-minute rounds. The green side of each board was used by the Generators and the red side by the Discriminators. Participants were instructed not to look at the other team’s side of the board at any point during the activity. The white ‘Neutral’ area was used by both teams.

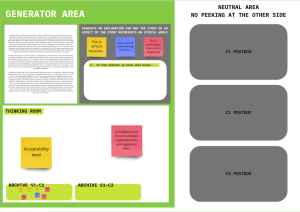

Stage 1: the Generators’ activity

Beginning with the first Miro board and story, in each 5-minute round the Generators read the story. Using the yellow, blue and pink ‘sticky notes’ as prompts, they then discussed how the world of this story (or aspects of it) represented an ethical world, taking notes from the discussion in the Thinking Room. Next, they used the first candidate card to capture and synthesise the discussion as a short, affirmative candidate explanation of how the world of the story is ethical, and ‘posted’ it in the first postbox in the neutral area. The Discriminators would then pick it up and approve or deny it, without giving the Generators any explanation during the activity. Meanwhile, the Generators moved on to repeat this task with the next story. Once they had finished all four stories, they returned to each one in order, producing a new candidate wherever a previous candidate had been denied. The facilitators filed notes from the discussions of candidates in previous rounds in the Thinking Room archives. The goal of the activity was to get a candidate approved for at least one story.

Stage 1: the Discriminators’ activity

Starting one 5-minute round after the Generators had completed Candidate 1 for Story 1, the Discriminators took the candidate from the neutral area and moved it to the postbox in their own area, ready to approve or deny it as a description of an ethical world without viewing the original story. Using the yellow, blue, pink and green ‘sticky notes’ as prompts, they discussed grounds for approval or denial and took notes from the discussion in the Thinking Room. They then decided whether to approve or deny the candidate and placed it back in the neutral area with the corresponding stamp. Finally, they completed and filed a decision history card that captured and synthised the discussion as a short, affirmative statement explaining their rationale for approval or denial. These cards were not shared with the Generators until the end of the activity. For stories with more than one candidate, the facilitator filed notes from discussions of previous candidates in the Thinking Room archives.

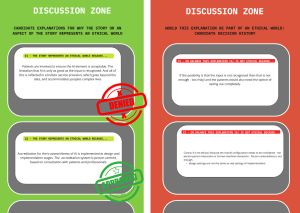

Stage 2: plenary discussion

For the plenary discussion in Stage 2, the Generators’ approved and denied candidates for an ethical world and the Discriminators’ decision history cards were pulled down into the discussion zone, where they were read side by side for the first time. Finally, using the purple, blue, pink and orange ‘sticky notes’ as prompts, the group discussed and noted their reflections on the activity, together with their perspectives on, and insights into, the ethical configuration of AI in healthcare, drawing on both their existing knowledge and their experience of the workshop.

[1] A Generative Adversarial Network (GAN) is a machine learning framework, introduced by Ian Goodfellow and colleagues in 2014, in which two neural networks, a Generator and a Discriminator, learn to create authentic-seeming content (in particular, images) by engaging in a zero-sum game. In this game, the Generator produces thousands of ‘candidates’ from random input and sends them to the Discriminator in an attempt to fool it, while the Discriminator tries to tell these candidates apart from real examples. Throughout this interplay, both networks are continually updated.
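For readers who want the formal version, the zero-sum game described in this footnote is usually written, in the original formulation by Goodfellow and colleagues, as a minimax objective over a value function $V(D, G)$. The Discriminator $D$ tries to maximise it by giving real data $x$ a high score and generated candidates $G(z)$ a low one, while the Generator $G$ tries to minimise it by producing candidates that $D$ scores as real. Here $p_{\text{data}}$ is the distribution of real data and $p_z$ is the distribution of the random input (noise) fed to the Generator. This is offered only as a sketch of the standard formulation, not as a description of anything computed in the workshop:

$$
\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

In practice, training alternates between updating $D$ to increase $V$ and updating $G$ to decrease it, which is the continual updating of both networks referred to above.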